20170126 big data processing

- 1. Big Data Processing January 2017

- 2. Stefan Papp • Data Architect at The unbelievable Machine Company • Software engineering background • Jack of all trades who also dives into business topics, systems engineering and data science • Big Data since 2011 • Cross-industry: from automotive to transportation • Other activities • Trainer: Hortonworks Apache Hadoop Certified Trainer • Author: articles and book projects • Lecturer: Big Data at FH Technikum and FH Wiener Neustadt

- 3. Agenda • Big Data Processing • Evolution in Processing Big Data • Data Processing Patterns • Components of a Data Processing Engine • Apache Spark • Concept • Ecosystem • Apache Flink • Concept • Ecosystem

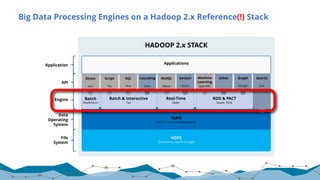

- 4. Big Data Processing Engines on a Hadoop 2.x Reference(!) Stack • File System: HDFS (redundant, reliable storage) • Data Operating System: YARN (cluster resource management) • Engines and APIs (label: component) • Batch: MapReduce • Batch & Interactive: Tez • Real-Time: Slider • Direct: Java • Search: Solr • Script: Pig • SQL: Hive • Java: Cascading • NoSQL: HBase • Stream: Storm • RDD & PACT: Spark, Flink • Machine Learning: SparkML • Graph: Giraph • Other Applications

- 6. Big Data Roots – Content Processing for Search Engines

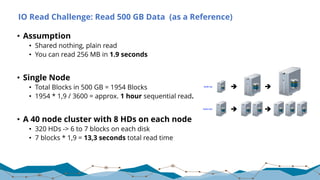

- 7. IO Read Challenge: Read 500 GB Data (as a Reference) • Assumption • Shared nothing, plain read • You can read 256 MB in 1.9 seconds • Single node • Total blocks in 500 GB = 1954 blocks • 1954 * 1.9 / 3600 = approx. 1 hour sequential read • A 40-node cluster with 8 HDs on each node • 320 HDs -> 6 to 7 blocks on each disk • 7 blocks * 1.9 = 13.3 seconds total read time
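
The arithmetic on this slide can be reproduced in a few lines. This is a minimal sketch using only the slide's numbers; it assumes 1 GB is counted as 1000 MB (which yields the 1954 blocks quoted above) and that the busiest disk determines the cluster's total read time. The variable names are illustrative.

```python
import math

BLOCK_MB = 256            # block size from the slide
SECONDS_PER_BLOCK = 1.9   # time to read one block from one disk (slide assumption)
DATA_MB = 500 * 1000      # 500 GB, counted as 1000 MB per GB (assumption)

blocks = math.ceil(DATA_MB / BLOCK_MB)

# Single node, plain sequential read: one block after another.
single_node_seconds = blocks * SECONDS_PER_BLOCK

# 40 nodes with 8 disks each: blocks are spread over 320 disks that read
# in parallel, so the disk holding the most blocks sets the total time.
disks = 40 * 8
blocks_on_busiest_disk = math.ceil(blocks / disks)
cluster_seconds = blocks_on_busiest_disk * SECONDS_PER_BLOCK

print(f"blocks: {blocks}")
print(f"single node: {single_node_seconds / 3600:.2f} hours")
print(f"cluster: {cluster_seconds:.1f} seconds")
```

The speed-up comes purely from reading many disks at once; the per-block read time is the same in both cases.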

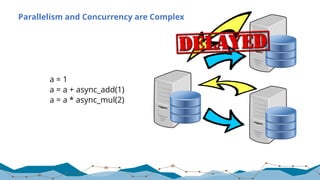

- 8. Parallelism and Concurrency are Complex a = 1 a = a + async_add(1) a = a * async_mul(2)
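
A small sketch of why the pseudocode above is problematic: if the two updates to `a` may complete in either order, the final value depends on that order. The functions `add_one`, `mul_two` and `run` are hypothetical stand-ins for the asynchronous calls on the slide, and the two orderings are simulated sequentially so the result is deterministic.

```python
def add_one(a):
    return a + 1

def mul_two(a):
    return a * 2

def run(steps, a=1):
    # Apply the updates in the order in which they happen to complete.
    for step in steps:
        a = step(a)
    return a

in_order = run([add_one, mul_two])    # (1 + 1) * 2
reordered = run([mul_two, add_one])   # (1 * 2) + 1
print(in_order, reordered)            # prints "4 3"
```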

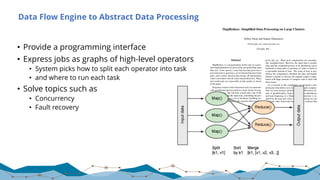

- 9. Data Flow Engine to Abstract Data Processing • Provide a programming interface • Express jobs as graphs of high-level operators • System picks how to split each operator into tasks • and where to run each task • Solve topics such as • Concurrency • Fault recovery

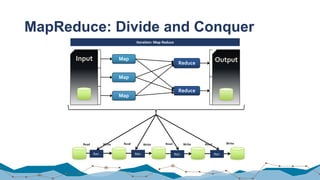

- 10. MapReduce: Divide and Conquer • Data flow: Input -> Map tasks (in parallel) -> Reduce tasks -> Output • One iteration = one Map phase plus one Reduce phase • Every iteration reads its input from storage and writes its result back to storage
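
To make the map and reduce phases concrete, here is a minimal single-process sketch of a word count in the MapReduce style. It is not Hadoop's API: `map_phase`, `shuffle` and `reduce_phase` are illustrative names, and the shuffle that a real cluster performs over the network is simulated with a dictionary.

```python
from collections import defaultdict

def map_phase(line):
    # Map: emit one (word, 1) pair per word in the input record.
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    # Shuffle: group all values that belong to the same key.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    # Reduce: fold the values of one key into a single result.
    return key, sum(values)

lines = ["This is a line of text", "And so is this"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Each call to `map_phase` only looks at one record and each call to `reduce_phase` only looks at one key, which is what allows a cluster to run them in parallel.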

- 12. Evolution of Data Processing 2004 2007 2010 2010

- 13. Data Processing Patterns Classification and Processing Patterns

- 14. Batch Processing • Sources (SQL import, file import) -> periodic ingestion -> storage layer -> batch processor (periodic analysis job, started by a job scheduler) -> consumer

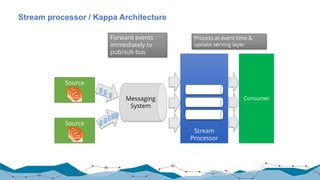

- 15. Stream Processor / Kappa Architecture • Sources -> messaging system (forward events immediately to pub/sub bus) -> stream processor (process at event time & update serving layer) -> consumer

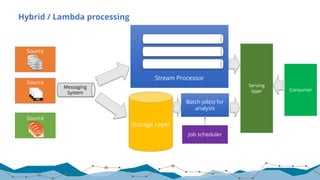

- 16. Hybrid / Lambda Processing • Components: sources, messaging system, storage layer, job scheduler, batch job(s) for analysis, stream processor, serving layer, consumer

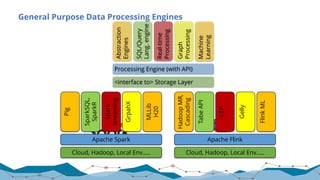

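- 19. General Purpose Data Processing Engines • Apache Spark (processing engine with API) • Abstraction engines: Pig • SQL/query language engine: SparkSQL, SparkR • Real-time processing: Spark Streaming • Machine learning: MLlib, H2O • Graph processing: GraphX • Interface to storage layer: cloud, Hadoop, local environment, … • Apache Flink (processing engine with API) • Abstraction engines: Hadoop MR, Cascading • SQL/query language engine: Table API • Real-time processing: CEP • Machine learning: FlinkML • Graph processing: Gelly • Interface to storage layer: cloud, Hadoop, local environment, …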

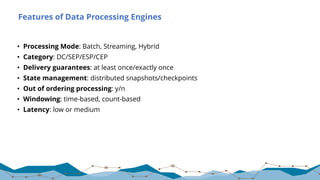

- 20. Features of Data Processing Engines • Processing mode: batch, streaming, hybrid • Category: DC/SEP/ESP/CEP • Delivery guarantees: at least once/exactly once • State management: distributed snapshots/checkpoints • Out-of-order processing: yes/no • Windowing: time-based, count-based • Latency: low or medium

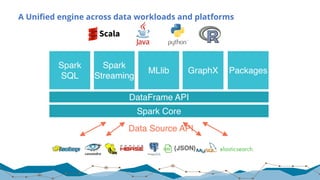

- 22. A Unified engine across data workloads and platforms

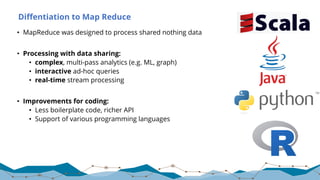

- 23. Differentiation from MapReduce • MapReduce was designed to process shared-nothing data • Processing with data sharing: • complex, multi-pass analytics (e.g. ML, graph) • interactive ad-hoc queries • real-time stream processing • Improvements for coding: • Less boilerplate code, richer API • Support for various programming languages

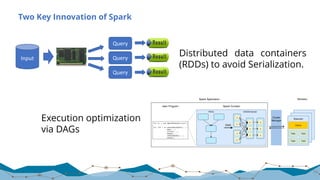

- 24. Two Key Innovations of Spark • Execution optimization via DAGs • Distributed data containers (RDDs) to avoid serialization

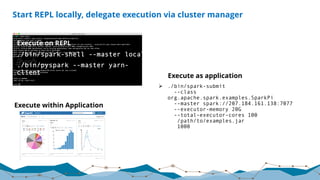

- 26. Start REPL locally, delegate execution via cluster manager • Execute on REPL • ./bin/spark-shell --master local • ./bin/pyspark --master yarn-client • Execute as application • ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://207.184.161.138:7077 --executor-memory 20G --total-executor-cores 100 /path/to/examples.jar 1000 • Execute within application

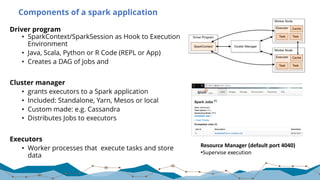

- 27. Components of a Spark application • Driver program • SparkContext/SparkSession as hook to the execution environment • Java, Scala, Python or R code (REPL or app) • Creates a DAG of jobs • Cluster manager • Grants executors to a Spark application • Included: Standalone, YARN, Mesos or local • Custom made: e.g. Cassandra • Distributes jobs to executors • Executors • Worker processes that execute tasks and store data • Spark web UI (default port 4040) • Supervises execution

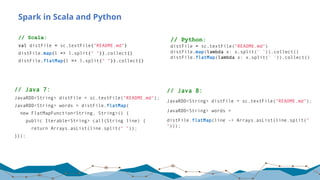

- 29. Spark in Scala and Python // Scala: val distFile = sc.textFile("README.md") distFile.map(l => l.split(" ")).collect() distFile.flatMap(l => l.split(" ")).collect() # Python: distFile = sc.textFile("README.md") distFile.map(lambda x: x.split(' ')).collect() distFile.flatMap(lambda x: x.split(' ')).collect() // Java 7 (Spark 2.x, where flatMap functions return an Iterator): JavaRDD<String> distFile = sc.textFile("README.md"); JavaRDD<String> words = distFile.flatMap( new FlatMapFunction<String, String>() { public Iterator<String> call(String line) { return Arrays.asList(line.split(" ")).iterator(); }}); // Java 8: JavaRDD<String> distFile = sc.textFile("README.md"); JavaRDD<String> words = distFile.flatMap(line -> Arrays.asList(line.split(" ")).iterator());

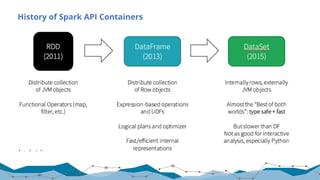

- 30. History of Spark API Containers

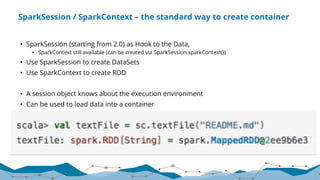

- 31. SparkSession / SparkContext – the standard way to create a container • SparkSession (starting from 2.0) as hook to the data • SparkContext still available (can be accessed via SparkSession.sparkContext()) • Use SparkSession to create Datasets • Use SparkContext to create RDDs • A session object knows about the execution environment • Can be used to load data into a container

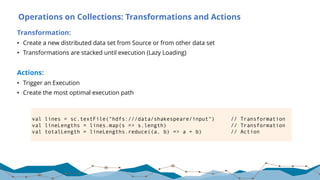

- 32. Operations on Collections: Transformations and Actions val lines = sc.textFile("hdfs:///data/shakespeare/input") // Transformation val lineLengths = lines.map(s => s.length) // Transformation val totalLength = lineLengths.reduce((a, b) => a + b) // Action Transformations: • Create a new distributed data set from a source or from another data set • Transformations are stacked until execution (lazy evaluation) Actions: • Trigger an execution • Spark then determines an optimized execution path
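
The difference between stacking transformations and triggering them with an action can be illustrated without Spark. The following is a toy sketch only: `LazyDataset` is a hypothetical class, not Spark's implementation, and it runs in a single process.

```python
class LazyDataset:
    """Toy stand-in for a distributed collection; not Spark's implementation."""

    def __init__(self, source, ops=()):
        self._source = source
        self._ops = tuple(ops)   # recorded transformations, not yet executed

    def map(self, fn):
        # Transformation: only record the function and return a new dataset.
        return LazyDataset(self._source, self._ops + (fn,))

    def reduce(self, fn):
        # Action: run every recorded transformation, then fold the results.
        result, first = None, True
        for item in self._source:
            for op in self._ops:
                item = op(item)
            result = item if first else fn(result, item)
            first = False
        return result

calls = []

def length(s):
    calls.append(s)   # record when the function actually runs
    return len(s)

lines = LazyDataset(["to be", "or not", "to be"])
line_lengths = lines.map(length)                         # nothing executes yet
calls_before_action = len(calls)
total_length = line_lengths.reduce(lambda a, b: a + b)   # triggers execution
print(calls_before_action, total_length)                 # prints "0 16"
```

Because nothing runs until the action is called, an engine sees the whole chain of operators before it has to decide how to execute them.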

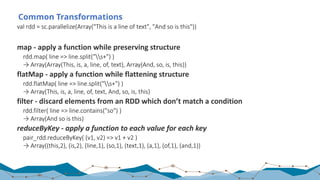

- 33. Common Transformations val rdd = sc.parallelize(Array("This is a line of text", "And so is this")) map - apply a function while preserving structure rdd.map( line => line.split("\\s+") ) → Array(Array(This, is, a, line, of, text), Array(And, so, is, this)) flatMap - apply a function while flattening structure rdd.flatMap( line => line.split("\\s+") ) → Array(This, is, a, line, of, text, And, so, is, this) filter - discard elements from an RDD which don’t match a condition rdd.filter( line => line.contains("so") ) → Array(And so is this) reduceByKey - merge the values of each key with a function pair_rdd.reduceByKey( (v1, v2) => v1 + v2 ) → Array((this,2), (is,2), (line,1), (so,1), (text,1), (a,1), (of,1), (and,1))
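
The structural difference between map and flatMap is easy to see on plain Python lists. This sketch mirrors the slide's sample data with list comprehensions and `itertools.chain`; it illustrates the semantics only and does not use Spark.

```python
import re
from itertools import chain

lines = ["This is a line of text", "And so is this"]

def split_words(line):
    # Split on runs of whitespace, like the "\\s+" pattern on the slide.
    return re.split(r"\s+", line)

mapped = [split_words(line) for line in lines]        # one list per input line
flat_mapped = list(chain.from_iterable(mapped))       # one flat list of words
filtered = [line for line in lines if "so" in line]   # keep matching lines only

print(mapped)
print(flat_mapped)
print(filtered)
```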

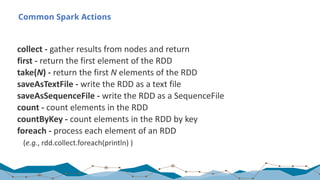

- 34. Common Spark Actions collect - gather results from nodes and return first - return the first element of the RDD take(N) - return the first N elements of the RDD saveAsTextFile - write the RDD as a text file saveAsSequenceFile - write the RDD as a SequenceFile count - count elements in the RDD countByKey - count elements in the RDD by key foreach - process each element of an RDD (e.g., rdd.collect.foreach(println) )

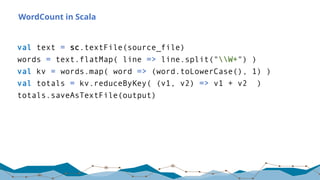

- 35. WordCount in Scala val text = sc.textFile(source_file) val words = text.flatMap( line => line.split("\\W+") ) val kv = words.map( word => (word.toLowerCase(), 1) ) val totals = kv.reduceByKey( (v1, v2) => v1 + v2 ) totals.saveAsTextFile(output)

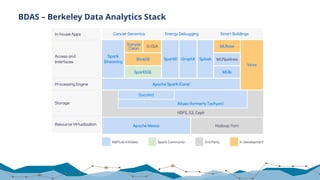

- 37. BDAS – Berkeley Data Analytics Stack

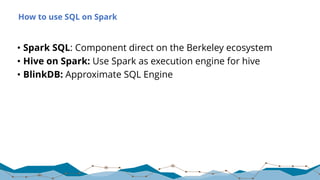

- 38. How to use SQL on Spark • Spark SQL: component built directly into the Berkeley ecosystem • Hive on Spark: use Spark as execution engine for Hive • BlinkDB: approximate SQL engine

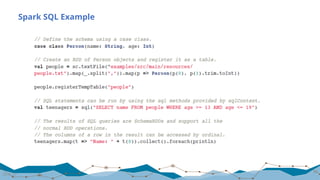

- 39. Spark SQL Spark SQL uses DataFrames (Typed Data Containers) for SQL Hive: c = HiveContext(sc) rows = c.sql("select * from titanic") rows.filter(rows['age'] > 25).show() JSON: c.read.format('json').load('file:///root/tweets.json').registerTempTable("tweets") c.sql("select text, user.name from tweets")

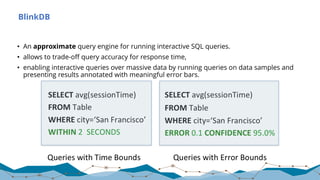

- 41. BlinkDB • An approximate query engine for running interactive SQL queries • Lets users trade off query accuracy for response time • Enables interactive queries over massive data by running queries on data samples and presenting results annotated with meaningful error bars
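
The idea of answering a query from a sample and attaching an error bar can be sketched with the standard library. This is not BlinkDB's algorithm or API: the data is synthetic, the sample is a simple uniform random sample, and the error bar is an ordinary 95% confidence interval for a mean (1.96 standard errors).

```python
import math
import random
import statistics

random.seed(42)  # fixed seed so the run is repeatable

# Synthetic "table" of 100,000 values (assumption for illustration).
population = [random.uniform(0, 100) for _ in range(100_000)]

# Answer the query "average value" from 1% of the data only.
sample = random.sample(population, 1_000)
estimate = statistics.mean(sample)
std_error = statistics.stdev(sample) / math.sqrt(len(sample))
margin = 1.96 * std_error          # half-width of a 95% confidence interval

exact = statistics.mean(population)  # full scan, for comparison only
print(f"approximate mean: {estimate:.2f} +/- {margin:.2f}")
print(f"exact mean:       {exact:.2f}")
```

A larger sample shrinks the error bar at the cost of reading more data, which is the accuracy versus response time trade-off the slide describes.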

- 42. Streaming Spark Streaming and Structured Streaming

- 43. • Spark Streaming: streaming on RDDs • Structured Streaming: streaming on DataFrames

- 44. Machine Learning and Graph Analytics SparkML, Spark MLlib, GraphX, GraphFrames

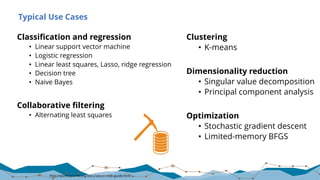

- 45. Typical Use Cases Classification and regression • Linear support vector machine • Logistic regression • Linear least squares, Lasso, ridge regression • Decision tree • Naive Bayes Collaborative filtering • Alternating least squares Clustering • K-means Dimensionality reduction • Singular value decomposition • Principal component analysis Optimization • Stochastic gradient descent • Limited-memory BFGS http://spark.apache.org/docs/latest/mllib-guide.html

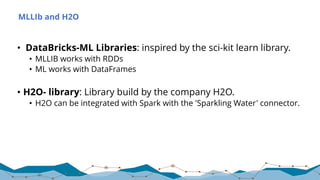

- 46. MLlib and H2O • Databricks ML libraries: inspired by the scikit-learn library • MLlib works with RDDs • ML works with DataFrames • H2O library: library built by the company H2O • H2O can be integrated with Spark with the 'Sparkling Water' connector

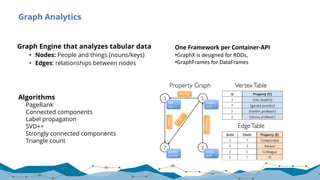

- 47. Graph Analytics Graph engine that analyzes tabular data • Nodes: people and things (nouns/keys) • Edges: relationships between nodes Algorithms • PageRank • Connected components • Label propagation • SVD++ • Strongly connected components • Triangle count One framework per container API • GraphX is designed for RDDs • GraphFrames for DataFrames

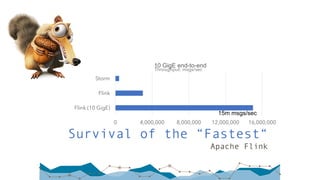

- 48. Survival of the "Fastest": Apache Flink • Bar chart: throughput in msgs/sec for Storm, Flink, and Flink (10 GigE) • 10 GigE end-to-end: 15m msgs/sec

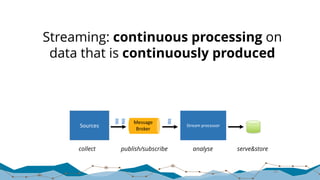

- 49. Streaming: continuous processing on data that is continuously produced • Components: sources, message broker, stream processor • Steps: collect, publish/subscribe, analyse, serve & store

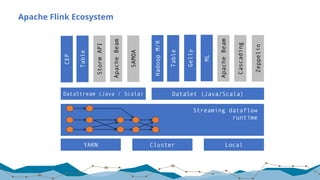

- 50. Apache Flink Ecosystem • Libraries and integrations: Gelly, Table, ML, SAMOA, Hadoop M/R, Apache Beam, Cascading, Storm API, Zeppelin, CEP • Core APIs: DataSet (Java/Scala), DataStream (Java/Scala) • Runtime: streaming dataflow runtime • Deployment: Local, Cluster, YARN

- 51. Expressive APIs case class Word (word: String, frequency: Int) DataStream API (streaming): val lines: DataStream[String] = env.fromSocketStream(...) lines.flatMap {line => line.split(" ") .map(word => Word(word,1))} .window(Time.of(5,SECONDS)).every(Time.of(1,SECONDS)) .groupBy("word").sum("frequency") .print() DataSet API (batch): val lines: DataSet[String] = env.readTextFile(...) lines.flatMap {line => line.split(" ") .map(word => Word(word,1))} .groupBy("word").sum("frequency") .print()

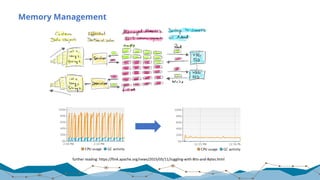

- 52. Flink Engine – Core Design Principles 1. Execute everything as streams 2. Allow some iterative (cyclic) dataflows 3. Allow some (mutable) state 4. Operate on managed memory

- 55. Windowing Apache Flink Low latency High throughput State handling Windowing Fault tolerance and correctness

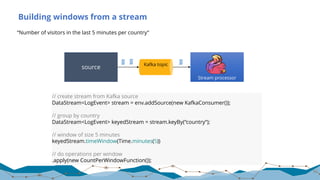

- 56. Building windows from a stream "Number of visitors in the last 5 minutes per country" • Source -> Kafka topic -> stream processor // create stream from Kafka source DataStream<LogEvent> stream = env.addSource(new KafkaConsumer()); // group by country KeyedStream<LogEvent, Tuple> keyedStream = stream.keyBy("country"); // window of size 5 minutes keyedStream.timeWindow(Time.minutes(5)) // do operations per window .apply(new CountPerWindowFunction());

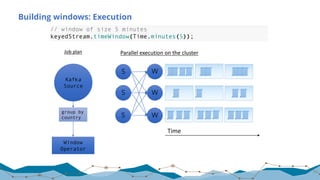

- 57. Building windows: Execution • Job plan: Kafka source -> group by country -> window operator • Parallel execution on the cluster: several source instances (S) and window operator instances (W) run side by side over time // window of size 5 minutes keyedStream.timeWindow(Time.minutes(5));

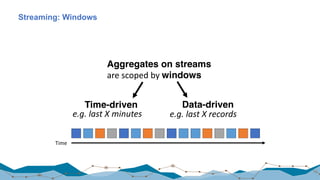

- 58. Streaming: Windows • Aggregates on streams are scoped by windows • Time-driven: e.g. last X minutes • Data-driven: e.g. last X records

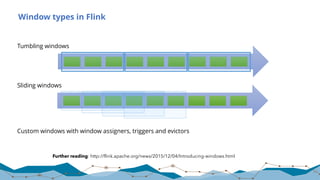

- 59. Window types in Flink • Tumbling windows • Sliding windows • Custom windows with window assigners, triggers and evictors • Further reading: http://flink.apache.org/news/2015/12/04/Introducing-windows.html
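
How tumbling and sliding windows assign events can be shown in plain Python. This is a minimal sketch, not Flink's window assigner API: events are hypothetical `(timestamp_in_seconds, value)` pairs, windows are half-open intervals `[start, start + size)`, and windows that would start before time 0 are ignored.

```python
def tumbling_windows(events, size):
    """Assign each event to exactly one window of `size` seconds."""
    windows = {}
    for ts, value in events:
        start = (ts // size) * size
        windows.setdefault(start, []).append(value)
    return windows

def sliding_windows(events, size, slide):
    """Assign each event to every window of `size` seconds that covers it;
    a new window starts every `slide` seconds."""
    windows = {}
    for ts, value in events:
        start = (ts // slide) * slide          # latest window start covering ts
        while start > ts - size and start >= 0:
            windows.setdefault(start, []).append(value)
            start -= slide
    return windows

events = [(1, "a"), (4, "b"), (6, "c"), (11, "d")]
tumbling = tumbling_windows(events, size=5)
sliding = sliding_windows(events, size=10, slide=5)
print(tumbling)   # {0: ['a', 'b'], 5: ['c'], 10: ['d']}
print(sliding)    # {0: ['a', 'b', 'c'], 5: ['c', 'd'], 10: ['d']}
```

With sliding windows an event can belong to several overlapping windows, which is why "c" and "d" each appear twice.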

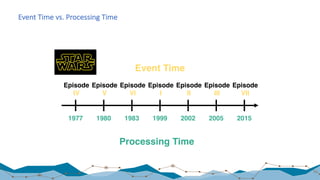

- 60. Event Time vs. Processing Time • Processing time (release year): 1977, 1980, 1983, 1999, 2002, 2005, 2015 • Event time (episode, listed in release order): Episode IV, Episode V, Episode VI, Episode I, Episode II, Episode III, Episode VII
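
The same analogy in code: each film carries an event time (its episode number, the position in the story) and a processing time (its release year, when the audience received it). A small sketch with the values from the slide:

```python
# (episode number = event time, release year = processing time)
films = [
    (4, 1977), (5, 1980), (6, 1983),
    (1, 1999), (2, 2002), (3, 2005), (7, 2015),
]

# Order in which the events arrived (processing time).
by_processing_time = [ep for ep, year in sorted(films, key=lambda f: f[1])]

# Order in which the events actually happened (event time).
by_event_time = [ep for ep, year in sorted(films, key=lambda f: f[0])]

print(by_processing_time)   # [4, 5, 6, 1, 2, 3, 7]
print(by_event_time)        # [1, 2, 3, 4, 5, 6, 7]
```

A stream processor that only knows processing time sees the first ordering; event-time processing uses the timestamp inside each event to recover the second.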

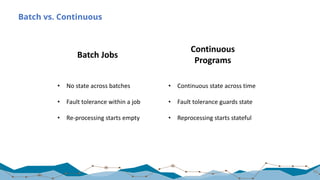

- 62. Batch vs. Continuous • Batch jobs • No state across batches • Fault tolerance within a job • Re-processing starts empty • Continuous programs • Continuous state across time • Fault tolerance guards state • Re-processing starts stateful

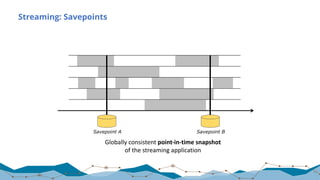

- 63. Streaming: Savepoints • Savepoint A, Savepoint B • Globally consistent point-in-time snapshot of the streaming application

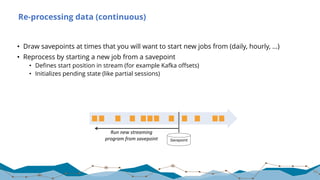

- 64. Re-processing data (continuous) • Draw savepoints at times that you will want to start new jobs from (daily, hourly, …) • Reprocess by starting a new job from a savepoint • Defines start position in stream (for example Kafka offsets) • Initializes pending state (like partial sessions) • Run new streaming program from savepoint
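
The two things a savepoint has to capture, the read position and the operator state at that position, can be sketched in a few lines. This is a single-process illustration, not Flink's savepoint mechanism: the `run` function, the list used as a stream, and the dictionary layout of the savepoint are assumptions made for the example.

```python
import copy

def run(stream, offset=0, state=None, stop_at=None):
    """Count events per key, starting at `offset` and optionally stopping early."""
    state = copy.deepcopy(state) if state else {}
    end = len(stream) if stop_at is None else stop_at
    for i in range(offset, end):
        key = stream[i]
        state[key] = state.get(key, 0) + 1
    # A savepoint pairs the read position with the state at that position.
    return {"offset": end, "state": state}

stream = ["at", "de", "at", "us", "de", "at"]

savepoint = run(stream, stop_at=3)        # snapshot after three events
resumed = run(stream, offset=savepoint["offset"], state=savepoint["state"])
full = run(stream)                        # uninterrupted run, for comparison

print(savepoint)
print(resumed == full)                    # prints "True"
```

Restoring only the offset would lose the counts accumulated so far, and restoring only the state would re-read events that are already counted; both parts are needed for a correct restart.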

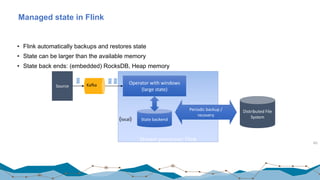

- 65. Stream processor: Flink • Managed state in Flink • Flink automatically backs up and restores state • State can be larger than the available memory • State backends: (embedded) RocksDB, heap memory • Diagram: Kafka source -> operator with windows (large state) -> local state backend -> periodic backup / recovery via a distributed file system

- 66. Fault Tolerance Apache Flink Low latency High throughput State handling Windowing Fault tolerance and correctness

- 67. Fault tolerance in streaming • How do we ensure the results are always correct? • Failures should not lead to data loss or incorrect results • Source -> Kafka topic -> stream processor

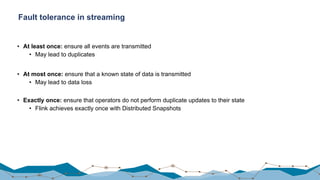

- 68. Fault tolerance in streaming • At least once: ensure all events are transmitted • May lead to duplicates • At most once: ensure that a known state of data is transmitted • May lead to data loss • Exactly once: ensure that operators do not perform duplicate updates to their state • Flink achieves exactly once with Distributed Snapshots
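
The effect of a redelivered event on operator state can be shown with a small sketch. It does not implement Flink's distributed snapshots; it only contrasts a naive at-least-once update with an update that skips events it has already applied. The `(event_id, amount)` tuples and both function names are hypothetical.

```python
def apply_at_least_once(deliveries):
    # Every delivery updates the state, so a redelivered event counts twice.
    total = 0
    for event_id, amount in deliveries:
        total += amount
    return total

def apply_deduplicated(deliveries):
    # Remember which ids were already applied and skip repeats,
    # so each event changes the state exactly once.
    seen, total = set(), 0
    for event_id, amount in deliveries:
        if event_id in seen:
            continue
        seen.add(event_id)
        total += amount
    return total

# Event 2 is delivered twice, e.g. after a retry following a failure.
deliveries = [(1, 10), (2, 5), (2, 5), (3, 7)]
print(apply_at_least_once(deliveries))   # 27
print(apply_deduplicated(deliveries))    # 22
```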

- 69. Low Latency Apache Flink Low latency High throughput State handling Windowing Fault tolerance and correctness

- 70. Yahoo! Benchmark • Count ad impressions grouped by campaign • Compute aggregates over a 10 second window • Emit window aggregates to Redis every second for query • Full Yahoo! article: https://yahooeng.tumblr.com/post/135321837876/benchmarking-streaming-computation-engines-at • “Storm […] and Flink […] show sub-second latencies at relatively high throughputs with Storm having the lowest 99th percentile latency. Spark streaming 1.5.1 supports high throughputs, but at a relatively higher latency.” (Quote from the blog post’s executive summary)

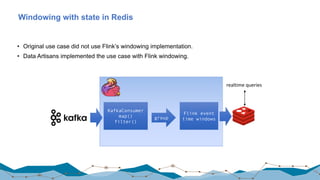

- 71. Windowing with state in Redis • Original use case did not use Flink’s windowing implementation. • Data Artisans implemented the use case with Flink windowing. • Pipeline: KafkaConsumer -> map() -> filter() -> group -> Flink event time windows -> realtime queries

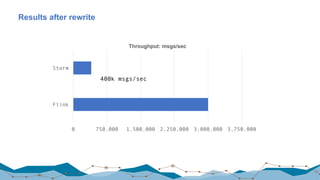

- 72. Results after rewrite • Bar chart: throughput in msgs/sec for Storm and Flink (axis from 0 to 3,750,000) • Labeled value: 400k msgs/sec

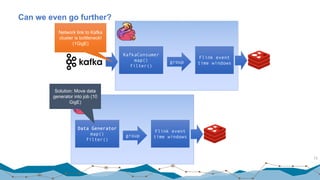

- 73. Can we even go further? • Before: KafkaConsumer -> map() -> filter() -> group -> Flink event time windows • Network link to Kafka cluster is bottleneck! (1 GigE) • Solution: Move data generator into job (10 GigE) • After: Data Generator -> map() -> filter() -> group -> Flink event time windows

- 74. Results without network bottleneck • Bar chart: throughput in msgs/sec for Storm, Flink, and Flink (10 GigE) (axis from 0 to 16,000,000) • Labeled values: 400k msgs/sec, 3m msgs/sec, and 15m msgs/sec (10 GigE end-to-end)

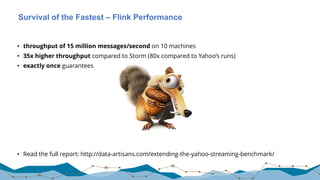

- 75. Survival of the Fastest – Flink Performance • throughput of 15 million messages/second on 10 machines • 35x higher throughput compared to Storm (80x compared to Yahoo’s runs) • exactly once guarantees • Read the full report: http://data-artisans.com/extending-the-yahoo-streaming-benchmark/

- 76. The unbelievable Machine Company GmbH Museumsplatz 1/10/13 1070 Wien Contact: Stefan Papp, Data Architect stefan.papp@unbelievable-machine.com Tel. +43 - 1 - 361 99 77 - 215 Mobile: +43 664 2614367
